Readings on AI’s Impact on Productivity

Evidence of the Effects of AI on Knowledge Worker Productivity and Quality

From Ethan Mollick, one of the authors of the paper:

BCG consultants (n = 758, 7% of the workforce) using AI finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without AI.

We also found something else interesting, an effect that is increasingly apparent in other studies of AI: it works as a skill leveler. The consultants who scored the worst when we assessed them at the start of the experiment had the biggest jump in their performance, 43%, when they got to use AI.

Fabrizio Dell’Acqua shows why relying too much on AI can backfire. In an experiment, he found that recruiters who used high-quality AI became lazy, careless, and less skilled in their own judgment. They missed some brilliant applicants and made worse decisions than recruiters who used low-quality AI or no AI at all. When the AI is very good, humans have no reason to work hard and pay attention: they let the AI take over instead of using it as a tool. He calls this “falling asleep at the wheel,” and it can hurt human learning, skill development, and productivity.


Experimental evidence on the productivity effects of generative artificial intelligence

From the paper’s abstract:

…we assigned occupation-specific, incentivized writing tasks to 453 college-educated professionals and randomly exposed half of them to ChatGPT. Our results show that ChatGPT substantially raised productivity: The average time taken decreased by 40% and output quality rose by 18%. Inequality between workers decreased, and concern and excitement about AI temporarily rose. Workers exposed to ChatGPT during the experiment were 2 times as likely to report using it in their real job 2 weeks after the experiment and 1.6 times as likely 2 months after the experiment.

The Impact of AI Tool on Engineering at ANZ Bank

Summary from Leadership in Tech:

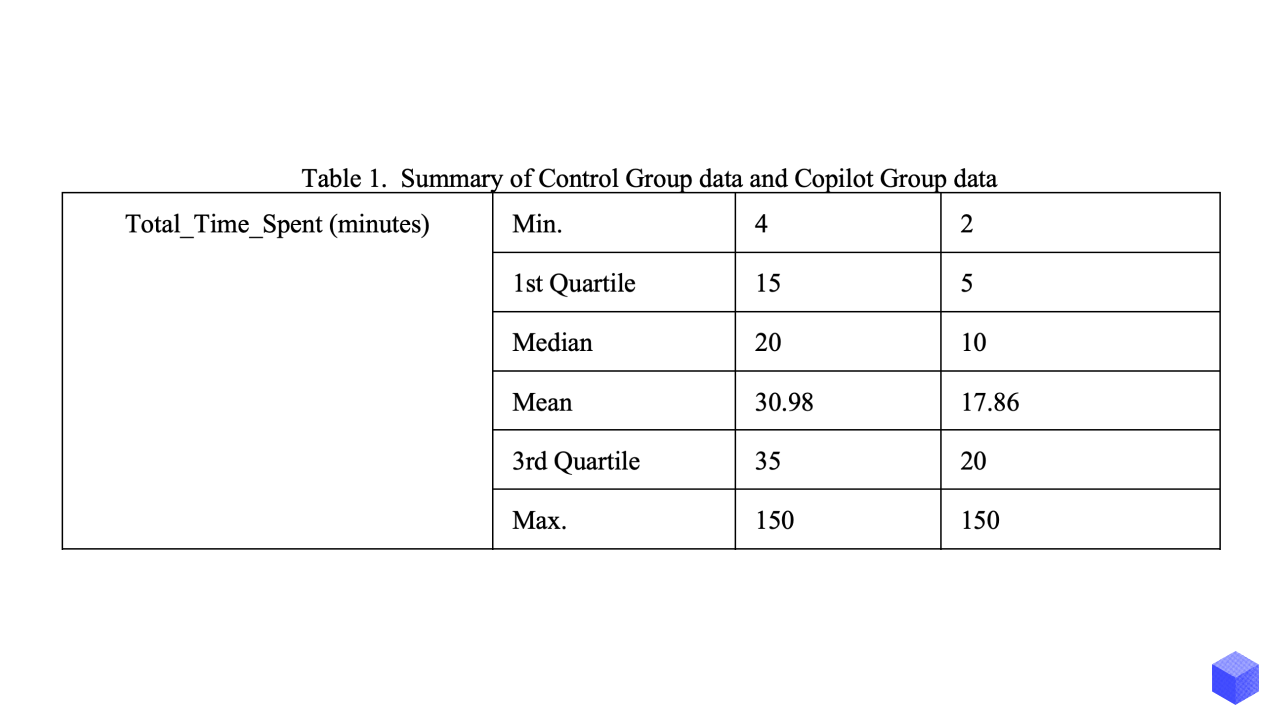

The group that had access to GitHub Copilot was able to complete their tasks 42.36% faster than the control group participants. This result is statistically significant. The code produced by Copilot participants contained fewer code smells and bugs on average, meaning it would be more maintainable and less likely to break in production.

And more from Abi Noda:

Copilot was beneficial for participants of all skill levels, but it was most helpful for those who were ‘Expert’ Python programmers.

The Impact of AI on Developer Productivity: Evidence from GitHub Copilot

From Microsoft:

The treatment group, with access to the AI pair programmer, completed the task 55.8% faster than the control group.

Further, the paper reports that the following groups benefited most from AI:

Developers with less experience

Older programmers

Those who code more hours per day

Assessing the Quality of GitHub Copilot’s Code Generation

The study assesses the quality of GitHub Copilot’s code generation and finds that Copilot generated valid code 91.5% of the time. Only 28.7% of the generated solutions were fully correct, and a further 51.2% were partially correct.
